If you are reading this, I am sure you are trying to get into the mysterious world of AI and are puzzled by its various terminologies:

- AI Model

- GPT - Generative Pre-trained Transformer

- LLM - Large Language Model

- FM - Foundation Model

- Text Generation

- Text Summarization

- Transformers

- Embeddings

In this article, I try to clarify a few things and give a brief introduction to these terms based on my understanding of the real world. To keep things simple, we will use the Kids/Human Learning model as our analogy.

Who should read this

This article is for total beginners who want to understand the basics of AI by comparing it with real-world circumstances and experiences. I have tried to narrate it in simple terms so that it makes sense to all kinds of readers (engineers, students, non-engineers, AI aspirants, or anyone with a personal interest).

If you are here looking for a great article that covers LLMs and ML in depth with intellectual real-life examples, sorry, this is not that kind of article. I recommend a few articles in the further reading section at the end of this article, so feel free to scroll down.

Let's begin

Artificial Intelligence - Potential Kid

Artificial intelligence is a way of enabling machines, such as computers, to think and act like humans. As kids growing up, we learn a lot of things, and we still do. That is what distinguishes us from one another: our intelligence varies based on our exposure and our own interests.

For example, a kid born and brought up in a city would have a different kind of intelligence than a peer from a village. I am not saying the village kid lacks intelligence; they are advanced in other skills.

I am that village kid from South India who never had a chance to touch a computer until I landed my first job in a city you might be familiar with (Chennai, India).

Once I was exposed to computers every day, I learned a lot, and 14 years down the line, here I am writing this article.

So, at the end of the day, our intelligence and skills are based on our exposure.

Why am I talking about exposure in an article about artificial intelligence, you might ask?

Well, by the same analogy, artificial intelligence is like a kid with a lot of potential, and what makes it great is exposure. Here, exposure means the billions (even trillions) of data points we feed to AI so it can understand the world better.

What makes AI a potential threat to us (humans) is that it has no drama or emotions, it has a narrow focus, and its time is not limited like ours.

Even though we feed data to artificial intelligence, how does AI understand it? How would AI perceive things or events? How does AI learn from it?

Let's try to solve these mysteries.

AI Model vs Kids/Human Learning Model

If I say "model", I am sure you will try to relate it to various things in computer science, engineering, or life in general.

When you hear a word like "model", your brain tries to understand what it is and relate it to something you have experienced before.

Let's say you do not know how to swim. As an adult, if I asked you to jump into a well with no safety gear, would you do it? I would not either if I were you.

Why not? Simply because of the fear of drowning.

If I gave you a piece of raw bitter gourd or lemon and said "munch it like chocolate", would you do it? No, because we have experienced the taste before.

So we make decisions based on our experience.

If I asked you to write a program that takes a number as input and checks whether it is a prime number, you could write it if you had already learned how. But if you have not been exposed to or trained in computers and programming, you could not do it.
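To make that example concrete, here is one way to write it in Python. It is a minimal sketch using simple trial division (checking odd divisors up to the square root of the number); the function name `is_prime` is just an illustrative choice.

```python
def is_prime(n: int) -> bool:
    """Return True if n is a prime number, using trial division up to sqrt(n)."""
    if n < 2:
        return False          # 0, 1 and negative numbers are not prime
    if n < 4:
        return True           # 2 and 3 are prime
    if n % 2 == 0:
        return False          # even numbers above 2 are not prime
    divisor = 3
    while divisor * divisor <= n:
        if n % divisor == 0:
            return False      # found a divisor, so n is not prime
        divisor += 2          # only odd divisors need checking
    return True


# Print the primes below 20.
print([n for n in range(20) if is_prime(n)])
```

To take the number from the user as the example describes, you could call `is_prime(int(input("Enter a number: ")))` instead.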

So our learning and exposure help here.

I learned to drive a car only recently, and I am still learning to be a better driver. I still remember my first day at driving school, when I almost told myself the car was not for me, but I learned after a lot of practice and failures.

Even if you learn to drive a car, can you go and drive an F1 car and become the racing champion? That needs training and countless hours spent mastering the skill.

Why am I saying all this? Because the way we learn is determined by the following things:

- exposure

- experience

- practice

- failures

- learnings and learning methods

- time spent on practising a skill - training

- the limitations and boundaries we set for ourselves, or that someone else sets for us

- our perceptions, like inferiority complex, imposter syndrome, etc.

So, considering AI as a kid, we need to train it and guide it so that it can grow into an intelligence.

In our kids analogy, if you are a father or mother, or have had a chance to see the early growth stages of a kid, you know they learn these three major things:

- Vision - Learning to identify colours, objects, animals, people etc

- Language - Learning to speak and understand the language we teach

- Motor skills - Learning to hold a pen, draw a circle, build blocks, kick, punch whatever we expose them to.

Some parents choose to expose their kids very early to many life skills, such as swimming, acrobatics, football, and many more.

So all of these stages, and the methodologies we use to teach a kid these primary skills, can be considered models: a set of principles for teaching your kid (or AI) what you want to teach.

When it comes to AI, we call it an AI model, and it relies on the same three things:

- Computer Vision - Identifying objects, colours, real-world pictures, etc. (related models such as DALL-E go the other way and generate images from text)

- Language - Natural Language Processing (NLP), e.g. ChatGPT

- Machine Learning - Carefully curated models that train our AI on text transformation, voice recognition, programming, current and past world affairs, history, and so on

If you have been around kids in their early growth stages, you might have seen parents asking their kids to identify objects and colours.

We ask, "Can you touch the red colour?", and depending on their skill they get it right or wrong. If they touch the wrong one, we correct them and try once again.

Eventually, they will get it. The same happens with Shapes and Animals.
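This "try, get corrected, try again" loop is close to how a perceptron, one of the simplest supervised learning algorithms, learns. Below is a minimal Python sketch, assuming each colour is described by its red, green and blue values between 0 and 1; the colours, labels, and names such as `training_data` and `predict` are made up for illustration. Every wrong answer nudges the weights toward the correct one, and training stops after a full round with no corrections.

```python
# Hypothetical training data: each colour is (red, green, blue), channels 0..1.
# Label 1 means "this is red", 0 means "this is not red".
training_data = [
    ((1.0, 0.0, 0.0), 1),   # pure red
    ((0.9, 0.1, 0.1), 1),   # slightly dull red
    ((0.8, 0.1, 0.0), 1),   # darker red
    ((0.0, 0.0, 1.0), 0),   # blue
    ((0.0, 1.0, 0.0), 0),   # green
    ((1.0, 1.0, 0.0), 0),   # yellow
    ((1.0, 1.0, 1.0), 0),   # white
    ((0.1, 0.1, 0.1), 0),   # near black
]

weights = [0.0, 0.0, 0.0]
bias = 0.0


def predict(colour):
    """Answer 1 (red) if the weighted sum is positive, else 0 (not red)."""
    score = sum(w * c for w, c in zip(weights, colour)) + bias
    return 1 if score > 0 else 0


for epoch in range(1000):
    mistakes = 0
    for colour, label in training_data:
        error = label - predict(colour)      # 0 if right, +1 or -1 if wrong
        if error != 0:
            # The "correction": nudge the weights toward the right answer.
            mistakes += 1
            for i in range(3):
                weights[i] += error * colour[i]
            bias += error
    if mistakes == 0:                        # a full round with no corrections
        break

print("learned weights:", weights, "bias:", bias)
print("is (0.95, 0.05, 0.05) red?", predict((0.95, 0.05, 0.05)))
```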

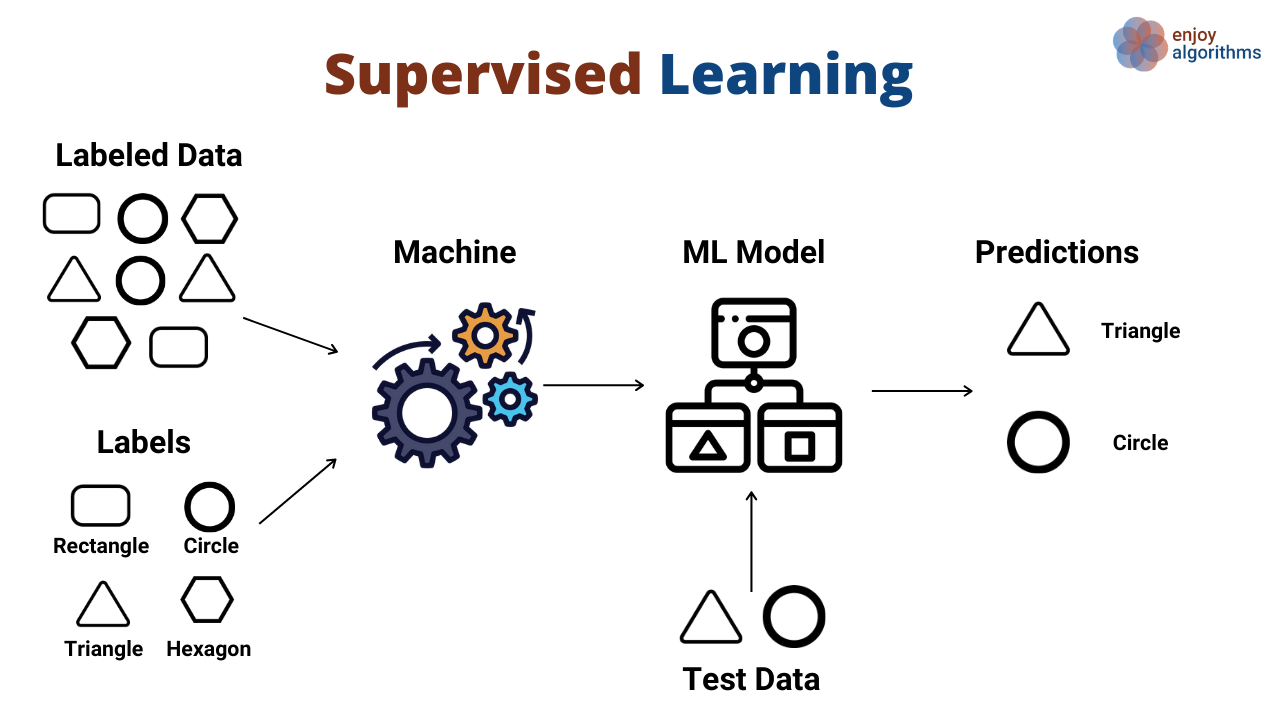

Here is an interesting image comparison that shows how close the AI model and the Kids Learning model are:

Kids learning to identify and arrange shapes

AI/ML model predicting and arranging the shapes

Do you find it relevant? If so, my analogy of comparing a kid with AI is working out.

So, I hope you now understand that an AI model is shaped by the set of training methods and strategies we apply to teach AI whatever essential skills we want it to learn.

If you have been a parent or around kids, you might have wondered on many occasions: how and where did my kid learn that?

It can be good or bad, but they learned it from somewhere, and it was not taught by you.

Having said that, I categorize a kid's learning into three types:

- Taught by you

- Not Taught by you but they learned somehow

- You taught them something and they figured out the rest

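Applying the same analogy to AI, there are three machine learning approaches:

- Supervised - Taught by you (the AI learns from labelled examples)

- Unsupervised - Not taught by you, but they learn anyway (the AI finds patterns in unlabelled data)

- Semi-supervised - You teach something and they figure out the rest (a few labelled examples plus lots of unlabelled data)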
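To see what "not taught by you, but they learn" looks like in code, here is a minimal sketch of k-means clustering, a classic unsupervised algorithm. It assumes one-dimensional data and naively starts from the first k points; the heights below are hypothetical. Nobody tells the algorithm which heights belong to kids and which to adults, yet it separates them into two groups on its own.

```python
def k_means(points, k, iterations=100):
    """Group 1-D points into k clusters without any labels (plain k-means)."""
    centroids = points[:k]                  # naive start: the first k points
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        new_centroids = [
            sum(c) / len(c) if c else centroids[i]   # keep an empty cluster in place
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:      # nothing moved, so we are done
            break
        centroids = new_centroids
    return centroids, clusters


# Hypothetical heights in cm of a mixed group of kids and adults (no labels given).
heights = [95, 100, 105, 170, 175, 180]
centroids, clusters = k_means(heights, k=2)
print(centroids)
print(clusters)
```

Starting from the first k points keeps the sketch deterministic; practical implementations usually use smarter starting points (such as k-means++) and work on multi-dimensional data.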

Besides categorizing AI models by their training style, there are many tools and algorithms available, each curated to teach different things.

We have vocal training, animal books, colour books, Lego towers, building blocks, and shape blocks to train kids.

Similarly, we have many training models to teach our AI different sets of skills. I have listed some of them below:

- Statistical Models:
  - Linear Regression: Used for modelling the relationship between a dependent variable and one or more independent variables.
  - Logistic Regression: Applicable for binary classification problems.
  - Decision Trees: Used for classification and regression tasks by recursively splitting data based on features.
  - Random Forest: Ensemble method that combines multiple decision trees for improved performance.
  - Support Vector Machines (SVM): Effective for both classification and regression tasks.
  - Naive Bayes: A probabilistic model for classification tasks based on Bayes' theorem.
- Neural Networks:
  - Artificial Neural Networks (ANN): Multilayer perceptrons with input, hidden, and output layers, used for deep learning tasks.
  - Convolutional Neural Networks (CNN): Specialized for image and video analysis, using convolutional layers.
  - Recurrent Neural Networks (RNN): Designed for sequential data, such as time series and natural language.
  - Long Short-Term Memory (LSTM): A type of RNN with enhanced memory for sequential data.
  - Transformers: Highly effective for natural language processing tasks, featuring attention mechanisms.
- Clustering Models:
  - K-Means Clustering: Groups data points into clusters based on similarity.
  - Hierarchical Clustering: Forms a hierarchy of clusters by iteratively merging or dividing them.
  - DBSCAN: Density-based clustering algorithm that identifies clusters with varying shapes and densities.
- Dimensionality Reduction Models:
  - Principal Component Analysis (PCA): Reduces the dimensionality of data while preserving as much of its variance as possible.
  - t-Distributed Stochastic Neighbor Embedding (t-SNE): Visualizes high-dimensional data by reducing it to a lower dimension.
- Natural Language Processing Models:
  - Word Embeddings (e.g., Word2Vec, GloVe): Represent words as continuous vectors for various NLP tasks.
  - BERT (Bidirectional Encoder Representations from Transformers): Pre-trained model for a wide range of NLP tasks.
  - GPT (Generative Pre-trained Transformer): Generates human-like text.
  - Seq2Seq (Sequence-to-Sequence): Used for machine translation and other sequence generation tasks.
- Reinforcement Learning Models:
  - Q-Learning: A model-free reinforcement learning algorithm for decision-making in environments with discrete states and actions.
  - Deep Q-Networks (DQN): Combines Q-learning with deep neural networks for complex tasks.
  - Policy Gradients: Directly optimize the policy itself; well suited to continuous action spaces.
- Probabilistic Models:
  - Hidden Markov Models (HMM): Used for modelling sequences with hidden states.
  - Bayesian Networks: A probabilistic graphical model for representing uncertainty.
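As a taste of what sits behind these names, here is linear regression, the first model in the list, as a minimal Python sketch. It fits a straight line with ordinary least squares for a single input variable; the practice-hours data is made up for illustration, and real projects would typically use a library such as scikit-learn instead.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one input: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    # The best-fit line always passes through the point (mean_x, mean_y).
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up data: hours of driving practice vs. score in a practice test.
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]
slope, intercept = fit_line(hours, scores)
print(f"score = {slope:.1f} * hours + {intercept:.1f}")
print(f"predicted score after 6 hours: {slope * 6 + intercept:.1f}")
```

The "dependent variable" from the list is the score, and the single "independent variable" is hours of practice.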

So here is an interesting fact:

ChatGPT is built on OpenAI's GPT (Generative Pre-trained Transformer) family of models, and the "GPT" in its name stands for Generative Pre-trained Transformer.

Comparing Kids Learning model and AI Model techniques

Kids Learning Vision (Kids Learning Model) vs. Computer Vision (AI):

Just as kids learn to recognize and understand objects and their surroundings, computer vision in AI enables machines to interpret and make sense of visual information from images and videos.

Kids Learning Playtime (Kids Learning Model) vs. Reinforcement Learning (AI):

Kids learn and grow through play, exploration, and feedback. Similarly, reinforcement learning lets an AI agent adapt and improve based on feedback (rewards and penalties) from its experiences. Like a kid at play, it tries actions, observes the consequences, and continually refines its behaviour.
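The play-and-feedback idea can be sketched with Q-learning, one of the reinforcement learning models listed earlier. This is a minimal sketch under simplifying assumptions: a hypothetical five-square corridor with a reward at the far end, and, instead of exploring randomly as an agent normally would, we sweep every square and action in turn so the result is deterministic. The agent is never told "go right"; it works that out from the rewards.

```python
# Hypothetical corridor: squares 0..4, with a reward waiting at square 4.
N_STATES = 5
GOAL = 4
ACTIONS = (-1, +1)            # step left or step right
ALPHA, GAMMA = 0.5, 0.9       # learning rate and discount factor


def step(state, action):
    """Move along the corridor (walls at both ends); reaching the goal pays 1."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward


# Q-table: the agent's estimate of how good each action is in each square.
q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}

for sweep in range(200):
    for s in range(N_STATES):
        if s == GOAL:
            continue                        # the goal ends the game; nothing to learn there
        for a in ACTIONS:
            s2, r = step(s, a)
            # Best value reachable from the next square (0 once the goal is reached).
            future = 0.0 if s2 == GOAL else max(q[(s2, b)] for b in ACTIONS)
            # Q-learning update: move the estimate toward reward + discounted future.
            q[(s, a)] += ALPHA * (r + GAMMA * future - q[(s, a)])

# The learned behaviour: in each square, pick the action with the highest Q-value.
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES) if s != GOAL}
print(policy)                               # +1 means "step right"
```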

Kids Learning Stories (Kids Learning Model) vs. Text Generation (AI):

Kids often learn from stories and narratives, and eventually start telling stories of their own. This is analogous to text generation in AI, where machines create narratives, articles, and other content based on the input and context they receive.
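Generating text boils down to repeatedly predicting what comes next. Real LLMs do this with huge Transformer networks; the Python sketch below swaps in a much simpler technique, a bigram model, which only remembers which word followed which in its "stories". The tiny training text and the function names are hypothetical, and the fixed `seed` just makes the random choices repeatable.

```python
import random
from collections import defaultdict


def build_bigrams(text):
    """Record, for every word, the words that followed it in the training text."""
    words = text.lower().split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(followers, start, length, seed=0):
    """Build a sentence by repeatedly sampling a word that followed the previous one."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(length - 1):
        options = followers.get(output[-1])
        if not options:              # dead end: this word was never followed by anything
            break
        output.append(rng.choice(options))
    return " ".join(output)


# A made-up "story book" to learn from.
stories = (
    "the kid saw a red ball . the kid kicked the ball . "
    "the dog saw the kid . the dog chased the red ball ."
)
followers = build_bigrams(stories)
print(generate(followers, "the", 12))
```

Words that appear more often after a given word are picked more often, which is a very rough version of how an LLM favours likely continuations.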

Kids Learning Simplification (Kids Learning Model) vs. Text Summarization (AI):

Just as children benefit from simplified explanations and learn to retell a long story in a few sentences, text summarization in AI condenses lengthy articles or information into concise summaries that are easier to understand.
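One simple, classic approach is extractive summarization: pick the sentences that best represent the whole text. The minimal Python sketch below scores each sentence by the average frequency of its words across the text; the stop-word list and sample text are assumptions for illustration. Modern LLMs instead write new (abstractive) summaries, which this sketch does not attempt.

```python
import re
from collections import Counter

# A small, hand-picked stop-word list (an assumption; real tools use larger lists).
STOP_WORDS = {"a", "an", "and", "the", "is", "are", "was", "to", "of",
              "in", "on", "by", "with", "it", "they"}


def words_in(text):
    """Lower-case words of the text, with stop words removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS]


def summarize(text, max_sentences=1):
    """Extractive summary: keep the sentences whose words are most frequent overall."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    freq = Counter(words_in(text))

    def score(sentence):
        tokens = words_in(sentence)
        # Average word frequency, so long sentences are not automatically favoured.
        return sum(freq[w] for w in tokens) / len(tokens) if tokens else 0.0

    top = set(sorted(sentences, key=score, reverse=True)[:max_sentences])
    # Keep the chosen sentences in their original order.
    return " ".join(s for s in sentences if s in top)


article = (
    "Kids learn colours by playing with toys. "
    "The weather was sunny that day. "
    "Kids learn shapes by playing with blocks. "
    "Playing helps kids learn colours and shapes."
)
print(summarize(article, max_sentences=1))
```

The off-topic weather sentence scores lowest because none of its words are repeated elsewhere, so it never makes it into the summary.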

Kids Learning Language Skills (Kids Learning Model) vs. Natural Language Processing (AI):

Kids develop language skills as they grow, learning to understand and communicate. This mirrors natural language processing (NLP) in AI, where machines work with human languages to perform various language-related tasks.

Comparing ChatGPT and BERT with the Kid Learning Model

LLM - Large Language Model:

Example: ChatGPT

Analogy: Think of Large Language Models (LLMs) as advanced stages of kids' language development. Just as children grow their vocabulary and linguistic abilities over time, LLMs are like "language prodigies" in AI. They have extensive knowledge of language, can generate sophisticated text, and are skilled at understanding and communicating complex concepts in a way that resembles how older kids or teenagers become more articulate and knowledgeable in their language use.

Description: ChatGPT is a Large Language Model developed by OpenAI. It's designed to understand and generate human-like text, making it suitable for conversational AI applications. ChatGPT can chat with users, answer questions, and provide text-based interactions, making it a valuable tool for a wide range of natural language understanding and generation tasks.

FM - Foundation Model:

Example: BERT (Bidirectional Encoder Representations from Transformers)

Analogy: Foundation Models (FMs) are like the educational foundation of a child's early years. They provide the basic knowledge and skills that later, more specialized learning builds upon, just as a foundation model is pre-trained once on a broad body of data and then adapted to many specific tasks.

Description: BERT is a widely used Foundation Model in the field of natural language processing. It forms the basis for various downstream NLP tasks, such as text classification, question-answering, and language understanding. BERT's pre-trained representations of language serve as the foundation for many language models and applications.

Transformers:

Example: GPT-3 (Generative Pre-trained Transformer 3)

Analogy: Transformers can be compared to the "learning gears" that help children adapt and evolve in their education. Just as children advance from one grade to the next, each building on the last, a Transformer stacks layer upon layer, with each layer refining the model's understanding of the text.

Description: GPT-3 is a well-known example of a Transformer-based model. It's designed for a variety of language tasks, including text generation, translation, summarization, and question-answering. Transformers like GPT-3 have revolutionized natural language processing by enabling powerful and versatile language understanding and generation capabilities.
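The attention mechanism at the heart of Transformers can be sketched in a few lines. Below is scaled dot-product attention in plain Python: each word scores how well it matches every other word, the scores become weights via softmax, and the output is a weighted blend of the words' vectors. This is a simplified sketch: the 2-dimensional word vectors are hypothetical, and a real Transformer would first multiply them by learned query, key and value matrices and run many attention heads in parallel.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    largest = max(scores)                    # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over plain Python lists of vectors."""
    d = len(keys[0])                         # vector size, used for scaling
    outputs = []
    for q in queries:
        # How well does this query match each key? (dot product scaled by sqrt(d))
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Blend the value vectors according to the attention weights.
        blended = [sum(w * v[j] for w, v in zip(weights, values))
                   for j in range(len(values[0]))]
        outputs.append(blended)
    return outputs


# Hypothetical 2-dimensional vectors for the words "kid", "kicked", "ball".
words = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
# In self-attention, queries, keys and values all come from the same words.
for row in attention(words, words, words):
    print([round(x, 3) for x in row])
```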

Embeddings:

Example: Word2Vec

Analogy: Embeddings are like the "knowledge connectors" in a child's education. Just as children learn how different pieces of knowledge fit together into a cohesive understanding of the world, embeddings help AI models understand how words and concepts relate to one another. They are the glue that holds language and concepts together for these models.

Description: Word2Vec is an example of word embeddings. It represents words as continuous vectors in a high-dimensional space, capturing semantic relationships between words. Word2Vec is used in various NLP applications, enabling machines to understand and work with words based on their embeddings, much like how kids learn word associations and meanings as they grow.
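How do vectors capture meaning? Words used in similar contexts end up with vectors pointing in similar directions, and that closeness is usually measured with cosine similarity. The Python sketch below uses hand-made 3-dimensional vectors as an assumption; real Word2Vec embeddings are learned from large text corpora and typically have a few hundred dimensions. The names `embeddings` and `most_similar` are illustrative.

```python
import math

# Hypothetical, hand-made "embeddings" (real ones are learned, not written by hand).
embeddings = {
    "kid":   [0.9, 0.8, 0.1],
    "child": [0.85, 0.75, 0.15],
    "car":   [0.1, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """1.0 means same direction (similar meaning); near 0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(word):
    """Return the other word whose vector is closest in direction to this word's."""
    others = [w for w in embeddings if w != word]
    return max(others, key=lambda w: cosine_similarity(embeddings[word], embeddings[w]))


print(round(cosine_similarity(embeddings["kid"], embeddings["child"]), 3))
print(round(cosine_similarity(embeddings["kid"], embeddings["car"]), 3))
print(most_similar("kid"))
```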

Top LLMs - Paid and Open Source

Since this is an introductory article, I want to touch upon a few myths around LLMs. I am sure you have heard of or used ChatGPT, but it is not the only LLM out there. There are more LLMs available: some you pay for, and open source ones you can self-host.

Paid LLMs

Open Source LLMs

If you are looking for the latest open source LLMs, check out the Open LLM Leaderboard by Hugging Face.

Conclusion

This is my thought process and narrative of how I compare and study AI using the Kid learning model. It may not be entirely accurate, but I hope it sets the context and helps you understand AI concepts and terminologies in simpler terms.

Feel free to share your feedback in the comments.

Share this article if you find it worthwhile.

Cheers

Sarav AK

Follow me on LinkedIn, and follow DevopsJunction on Facebook or Twitter. For more practical videos and tutorials, subscribe to our channel.
