We launch pods and wait for them to reach the Running state, but sometimes a pod ends up in the CrashLoopBackOff state instead.

The reason could be a simple capacity issue, or the container could have run out of memory (OutOfMemory). You can spot both when you describe the crashing pod using kubectl describe.

But sometimes it is purely an application issue: the application/container exits too quickly, and describing the pod gives you no clue.

In such cases, what do you do? There is a two-step process. Let us see what it is and how it can help.
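As a minimal sketch of that first check, the helper below prints why each container in a pod last terminated. The function name last_exit is hypothetical and not part of kubectl; it assumes kubectl is already configured for your cluster and uses only kubectl get pod with a JSONPath output template.

```shell
# Hypothetical helper: print name, last termination reason and exit code
# for every container in a pod, one container per line.
# Usage: last_exit <podname> [namespace]
last_exit() {
  pod="$1"
  ns="${2:-default}"
  kubectl get pod "$pod" -n "$ns" \
    -o jsonpath='{range .status.containerStatuses[*]}{.name}{"\t"}{.lastState.terminated.reason}{"\t"}{.lastState.terminated.exitCode}{"\n"}{end}'
}
```

An out-of-memory kill normally shows up here as the reason OOMKilled with exit code 137. A container that has never terminated simply leaves the last two columns empty.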

Let us start with the first step: checking the logs of the crashed pod.

Check the logs of the crashed pod with the --previous option

The first thing we can do is check the logs of the crashed pod using the following command:

$ kubectl logs <podname> -n <namespace> --previous

If the pod has multiple containers, you can use the following command and name the container explicitly with -c:

$ kubectl logs <podname> -n <namespace> --previous -c <container_name>
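If you do not know which container is crashing, a small loop can fetch the previous logs of every container in one go. This is only a sketch: previous_logs is a hypothetical helper name, and it assumes kubectl is configured for your cluster.

```shell
# Hypothetical helper: print the previous (crashed) logs of every container in a pod.
# Usage: previous_logs <podname> [namespace]
previous_logs() {
  pod="$1"
  ns="${2:-default}"
  # List the container names defined in the pod spec (space-separated).
  for c in $(kubectl get pod "$pod" -n "$ns" -o jsonpath='{.spec.containers[*].name}'); do
    echo "===== $c ====="
    # A container that has never restarted has no previous logs; keep going.
    kubectl logs "$pod" -n "$ns" -c "$c" --previous || true
  done
}
```

Calling previous_logs <podname> <namespace> prints one section per container, so the one with the stack trace or startup error is easy to spot.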

Launch the POD with Customized CMD or EntryPoint for an Image

The second step, and the real objective of this article, is to launch the same image with a customized startup command.

Let us suppose my Dockerfile looks like this:

FROM node:18-alpine
WORKDIR /
RUN mkdir /app
COPY ./ ./app
WORKDIR /app
CMD ["node", "index.js"]

When I create a deployment in Kubernetes, the command defined in the image (ENTRYPOINT/CMD, here the CMD instruction) is run by default to start the container.

But Kubernetes lets us run the same Docker image with a different startup command and arguments, irrespective of whatever CMD we have defined.

If you have some experience with the Docker ecosystem, it is much like the following docker command:

$ docker run -it --entrypoint=/bin/bash $IMAGE -i

Yes, we are running the same image we built, but with a custom ENTRYPOINT or CMD instead of the one it was built with.

So, can we use the same strategy and launch /bin/bash, /bin/sh or any other shell with our image and test it? Yes, we can.

This is what I do to exec (or "SSH", loosely speaking) into my crashing pod and troubleshoot why it is failing to start or ending up in CrashLoopBackOff.

It is one way to solve the mystery of CrashLoopBackOff. Here is the Kubernetes equivalent of what we did in Docker:

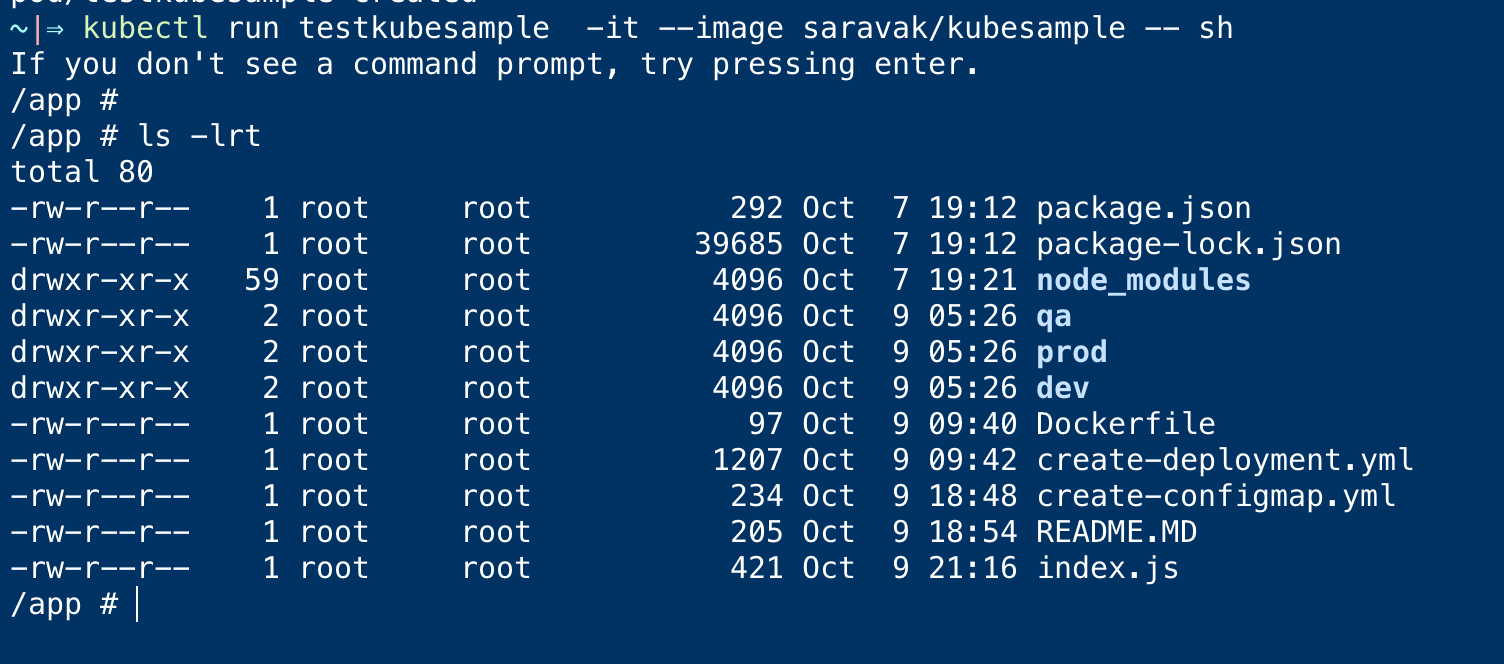

$ kubectl run testkubesample -it --image saravak/kubesample -- sh

This command launches our image with a custom command, in my case sh.

Since we have given -it as an option, it starts an interactive terminal right inside the newly launched pod.
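One detail worth knowing: by default kubectl run passes whatever follows -- as the container's args (the counterpart of Docker's CMD), so an ENTRYPOINT baked into the image still runs first. Adding the --command flag makes kubectl set the command field instead, which replaces the ENTRYPOINT. A minimal sketch, where shell_as_entrypoint is a hypothetical helper name:

```shell
# Hypothetical helper: start an interactive pod whose image ENTRYPOINT is
# replaced by sh, instead of sh being passed to the ENTRYPOINT as an argument.
# Usage: shell_as_entrypoint <podname> <image>
shell_as_entrypoint() {
  kubectl run "$1" -it --image="$2" --command -- sh
}
```

This matters mostly for images whose ENTRYPOINT is the very thing that crashes; for images that only define CMD, both forms behave the same.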

Even if you exit the terminal or the session, the pod remains running in the background.

You can use either kubectl attach or kubectl exec to get into the pod once again.

Here are the commands you can use:

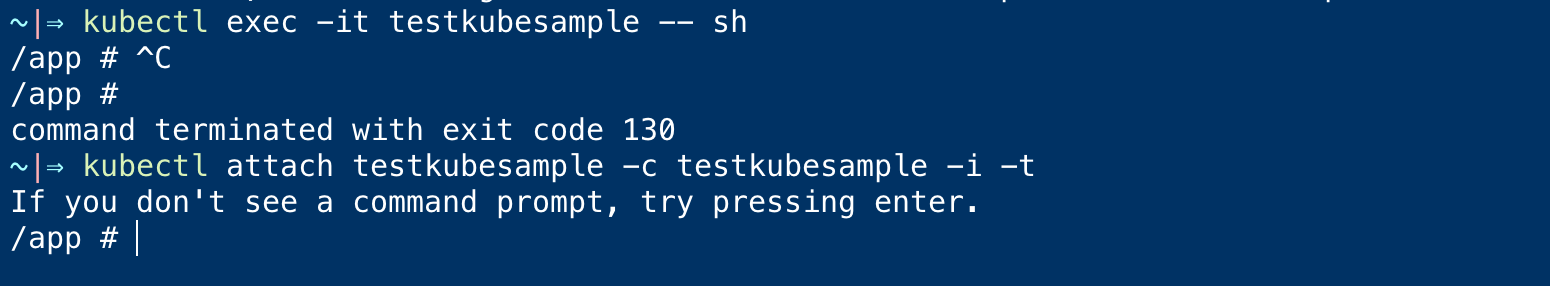

$ kubectl exec -it testkubesample -- /bin/sh

Or

$ kubectl attach testkubesample -c testkubesample -i -t

Here is a screenshot of me trying these commands at my end
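The pod started with kubectl run stays around after you leave the shell. If you would rather have it cleaned up automatically, a variant is to combine --rm with --restart=Never. This is a sketch, not what the commands above do; debug_shell and the default pod name debug-shell are hypothetical.

```shell
# Hypothetical helper: start a throwaway interactive shell from an image.
# --restart=Never stops Kubernetes from restarting the shell when you exit it,
# and --rm deletes the pod once the attached session ends.
# Usage: debug_shell <image> [podname]
debug_shell() {
  image="$1"
  name="${2:-debug-shell}"
  kubectl run "$name" -it --rm --restart=Never --image="$image" -- sh
}
```

For example, debug_shell saravak/kubesample opens a shell in the article's image and removes the pod when you type exit.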

If you do not want an interactive terminal, you can simply remove the -it option. Keep in mind that a bare shell exits immediately when no terminal is attached, so give the container something long-running to do:

$ kubectl run testkubesample --image saravak/kubesample -- /bin/sh -c "while true; do sleep 1000; done"

Without the -it option the pod gets created in the background, so you need to get into the pod with kubectl exec as we did above.

Now you have the logs and a way to SSH/exec into the failing or crashing pod, so you can find out what is wrong much more easily.

There is one more way to go about this.

Launching a Deployment with Customized Command option

Earlier we saw how to launch our image as a pod, with interactive and non-interactive options, and continue our troubleshooting.

What if you already have a deployment which you want to debug?

Kubernetes Deployments also support defining custom commands and entry points.

For example, refer to the following deployment YAML file with a custom command defined:

apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "4"
  labels:
    app: kubesample
  name: kubesample
  namespace: default
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: kubesample
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      annotations:
        kubectl.kubernetes.io/restartedAt: "2022-10-09T01:23:13+05:30"
      creationTimestamp: null
      labels:
        app: kubesample
    spec:
      containers:
      - command:
        - sh
        - -c
        - while true; do sleep 1000; done
        image: saravak/kubesample
        imagePullPolicy: Always
        name: kubesample
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      terminationGracePeriodSeconds: 30

If you look closely, this is the command we are using with the same image:

sh -c "while true; do sleep 1000; done"

This command just keeps our deployment and pods running so that we can debug. It is a simple while loop that sleeps forever.

You can edit your deployment, add this command section to your existing container, and restart the deployment to start debugging.
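Instead of editing the YAML by hand, the same change can be applied with kubectl patch. The sketch below assumes the container you want to debug is the first one in the pod template (index 0); keep_alive and undo_keep_alive are hypothetical helper names.

```shell
# Hypothetical helper: override the first container's command in an existing
# deployment with the keep-alive loop, using a JSON patch.
# Assumption: the container to debug is at index 0 of the pod template.
# Usage: keep_alive <deployment> [namespace]
keep_alive() {
  deploy="$1"
  ns="${2:-default}"
  kubectl patch deployment "$deploy" -n "$ns" --type=json \
    -p='[{"op":"add","path":"/spec/template/spec/containers/0/command","value":["sh","-c","while true; do sleep 1000; done"]}]'
}

# Hypothetical helper: roll back to the previous revision once debugging is done.
# Usage: undo_keep_alive <deployment> [namespace]
undo_keep_alive() {
  kubectl rollout undo "deployment/$1" -n "${2:-default}"
}
```

Patching the pod template triggers a new rollout on its own, so no separate restart is needed, and the rollback restores the original startup command.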

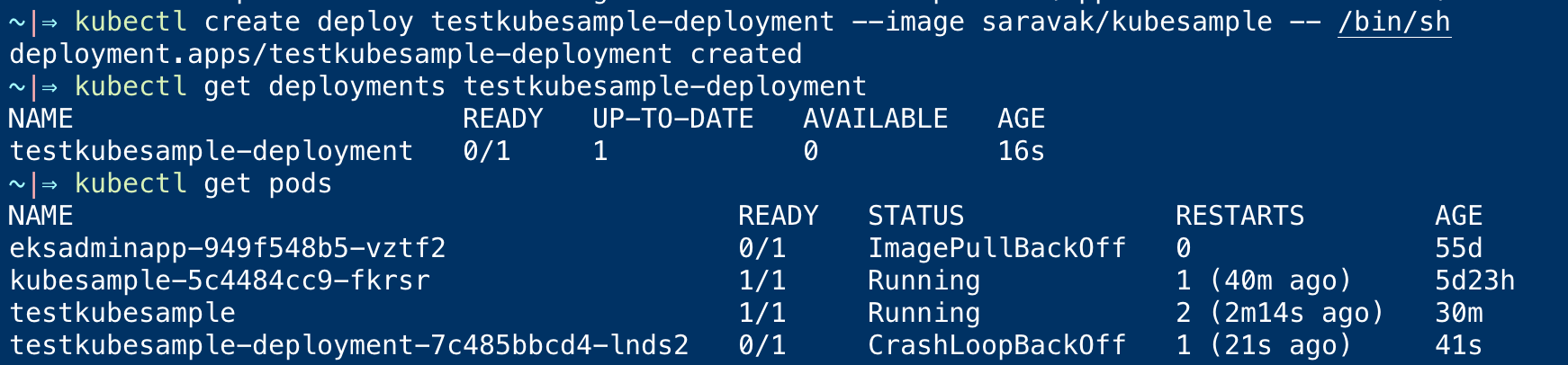

You can do it from the command line too, as we did with kubectl run.

But since a deployment maintains the desired state, you need to make sure the pod/container keeps running, so you cannot simply use sh as the command.

If you try that, the container will fail to stay alive and end up in CrashLoopBackOff.

The right command to keep your deployment running with a custom command is as follows:

$ kubectl create deploy testkubesample-deployment --image saravak/kubesample -- /bin/sh -c "while true; do sleep 1000; done"

It is the same while-loop command we used earlier, just passed on the imperative command line.

Once you have launched the deployments and pods, you can exec/SSH into them for your debugging.
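For a deployment you do not need to look up the generated pod name first: kubectl exec accepts a deployment/<name> reference and picks one of its pods. A minimal sketch, with deploy_shell as a hypothetical helper name:

```shell
# Hypothetical helper: wait for the rollout to finish, then open a shell in
# one of the deployment's pods (kubectl picks the pod for you).
# Usage: deploy_shell <deployment> [namespace]
deploy_shell() {
  deploy="$1"
  ns="${2:-default}"
  kubectl rollout status "deployment/$deploy" -n "$ns" &&
    kubectl exec -it "deployment/$deploy" -n "$ns" -- /bin/sh
}
```

For the deployment created above, that would be deploy_shell testkubesample-deployment.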

Thanks

Sarav AK
