AWS CLI has become a life saver when you want to manage your AWS infrastructure efficiently.

It also opens up a lot of possibilities for automation and reduces the number of times you have to log in to the AWS Management Console.

If you are new to the AWS CLI, please consider reading this article on how to set up the AWS CLI.

Without further ado, let's get into the objective of this post.

In this post we are going to talk about one specific AWS CLI command: aws s3.

While aws s3 provides a complete tool set to manage your S3 buckets, we are going to look at one specific feature of the S3 CLI today: copy.

Before we get there, we need to understand a few things about the AWS S3 CLI.

There are two AWS S3 CLI commands available:

- aws s3

- aws s3api

Let us see what they both have to offer.

What are AWS S3 and S3API

The AWS CLI provides two tiers of commands for accessing Amazon S3.

The s3 tier consists of high-level commands that simplify performing common tasks, such as creating, manipulating, and deleting objects and buckets.

The s3api tier behaves like the command sets of other AWS services: it exposes direct access to all Amazon S3 API operations, which enables you to carry out advanced operations that might not be possible with the s3 tier.

In this article we are going to talk only about the s3 tier, and specifically the s3 cp command, which helps us copy files from and to S3 buckets.

Before going any further, I want you to know a few handy commands that help you list buckets and their contents.

- aws s3 help - Lists all the commands available in the high-level s3 tier.

- aws s3 ls - Lists all your buckets.

- aws s3 ls s3://bucket-name - Lists all the objects and folders in that bucket.

- aws s3 ls s3://bucket-name/path/ - Filters the output to a specific prefix.
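As a small sketch of how these listing commands can be combined, the helper below lists everything under a prefix with readable sizes and a total at the end. The function name s3_ls_summary is hypothetical and only for illustration; --recursive, --human-readable and --summarize are options of aws s3 ls.

```shell
# Hypothetical helper (not part of the AWS CLI): list every object under a
# prefix, print sizes in a readable unit and end with a count/size summary.
# $1 = bucket name, $2 = optional prefix such as "logs/"
s3_ls_summary() {
    aws s3 ls "s3://$1/${2:-}" --recursive --human-readable --summarize
}
```

For example, s3_ls_summary bucket-name path/ would list the objects under path/ in bucket-name.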

Quick Caveats on AWS S3 CP command

- Copying a file from an S3 bucket to the local system is called a download

- Copying a file from the local system to an S3 bucket is called an upload

- Please be warned that failed uploads can't be resumed

- If the multipart upload fails due to a timeout or is manually cancelled by pressing CTRL + C, the AWS CLI cleans up any files created and aborts the upload. This process can take several minutes.

- If the process is interrupted by a kill command or system failure, the in-progress multipart upload remains in Amazon S3 and must be cleaned up manually in the AWS Management Console or with the s3api abort-multipart-upload command.
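The manual cleanup mentioned above could look roughly like the sketch below. The function names are hypothetical and the bucket, key and upload ID are placeholders you would supply yourself; list-multipart-uploads and abort-multipart-upload are s3api commands, and --query and --output are general AWS CLI options.

```shell
# Hypothetical helpers for cleaning up interrupted multipart uploads.

# Print the key and upload ID of every in-progress multipart upload.
# $1 = bucket name
list_stale_uploads() {
    aws s3api list-multipart-uploads --bucket "$1" \
        --query 'Uploads[].[Key,UploadId]' --output text
}

# Abort a single multipart upload so its parts no longer take up storage.
# $1 = bucket name, $2 = object key, $3 = upload ID from the listing above
abort_upload() {
    aws s3api abort-multipart-upload --bucket "$1" --key "$2" --upload-id "$3"
}
```

You would typically run list_stale_uploads bucket-name first, then pass each key and upload ID it prints to abort_upload.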

AWS S3 CP Command examples

Here we have listed a few examples of how to use the aws s3 cp command to copy files.

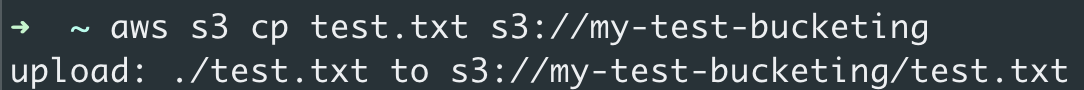

Copying a local file to S3

Uploading a file to S3, in other words copying a file from your local file system to S3, is done with the aws s3 cp command.

Let's suppose that your file name is file.txt. This is how you can upload it to S3:

aws s3 cp file.txt s3://bucket-name

When executed, the output of that command looks something like this:

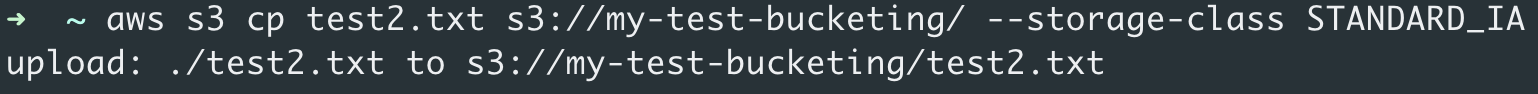
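Two small variations on the upload command may be useful here: previewing a copy before it runs, and the reverse direction (a download). This is a minimal sketch with hypothetical function names; --dryrun is an option of aws s3 cp that prints the operations without performing them.

```shell
# Hypothetical helpers around "aws s3 cp".

# Show what an upload would do without transferring anything.
# $1 = local file, $2 = bucket name
preview_upload() {
    aws s3 cp "$1" "s3://$2/" --dryrun
}

# Download one object from a bucket into the current directory.
# $1 = bucket name, $2 = object key
download_object() {
    aws s3 cp "s3://$1/$2" .
}
```

For example, preview_upload file.txt bucket-name shows the planned upload, and download_object bucket-name file.txt fetches the file back.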

Copying a local file to S3 with Storage Class

S3 provides several storage classes so that you can balance storage cost against how often and how quickly the data needs to be accessed.

- S3 Standard

- S3 Intelligent-Tiering

- S3 Standard-IA

- S3 One Zone-IA

- S3 Glacier

- S3 Glacier Deep Archive

You can read more information about all of them here

aws s3 cp file.txt s3://bucket-name --storage-class class-name

Console Output:

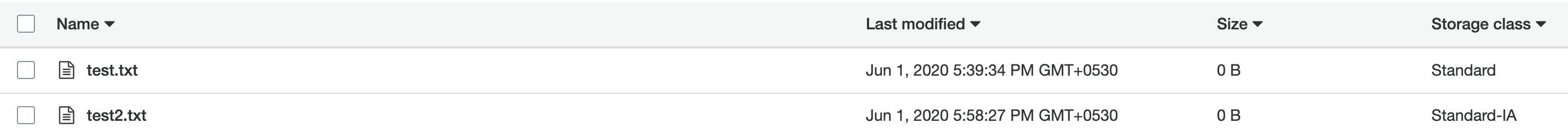

In the preceding snapshot you can see that the test2.txt file, which we have just uploaded, shows Standard-IA as its storage class.

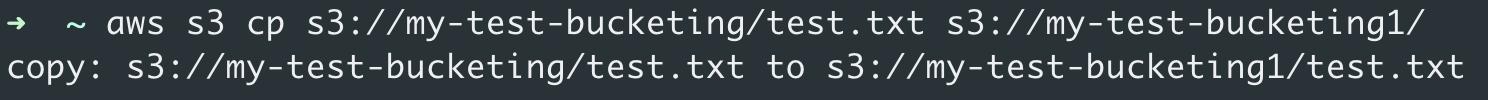
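As a concrete sketch, the helpers below upload a file with the Standard-IA class and then read the class back from the command line instead of the console. The function names are hypothetical; STANDARD_IA is the value that --storage-class accepts for S3 Standard-IA, and head-object is an s3api command.

```shell
# Hypothetical helpers for working with storage classes.

# Upload a file using the Standard-IA storage class.
# $1 = local file, $2 = bucket name
upload_infrequent_access() {
    aws s3 cp "$1" "s3://$2/" --storage-class STANDARD_IA
}

# Print the storage class recorded for an object.
# $1 = bucket name, $2 = object key
show_storage_class() {
    aws s3api head-object --bucket "$1" --key "$2" \
        --query StorageClass --output text
}
```

For example, upload_infrequent_access test2.txt bucket-name followed by show_storage_class bucket-name test2.txt.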

Copying an S3 object from one bucket to another

At times we want to copy content from one S3 bucket to another, and this is how it can be done with the AWS S3 CLI.

aws s3 cp s3://source-bucket-name/file.txt s3://destination-bucket-name/

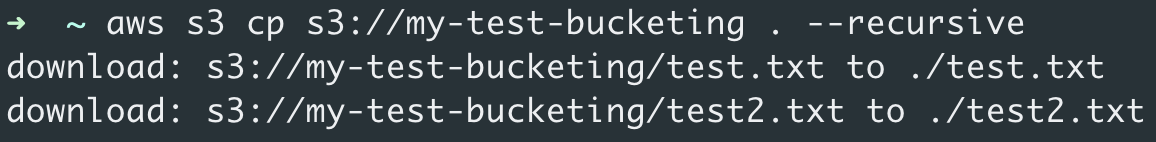

How to Recursively upload or download (copy) files with AWS S3 CP command

When passed the --recursive parameter, the aws s3 cp command recursively copies all objects from the source to the destination.

It can be used to download and upload large sets of files from and to S3.

Here is the AWS CLI S3 command to download a set of files recursively from S3. The dot (.) at the destination end represents the current directory.

aws s3 cp s3://bucket-name . --recursive

The same command can be used to upload a large set of files to S3, just by swapping the source and destination.

aws s3 cp . s3://bucket-name --recursive

Here we have just changed the source to the current directory and the destination to the bucket, so all the files in the current (local) directory are uploaded to the bucket.

It includes all the subdirectories and hidden files.

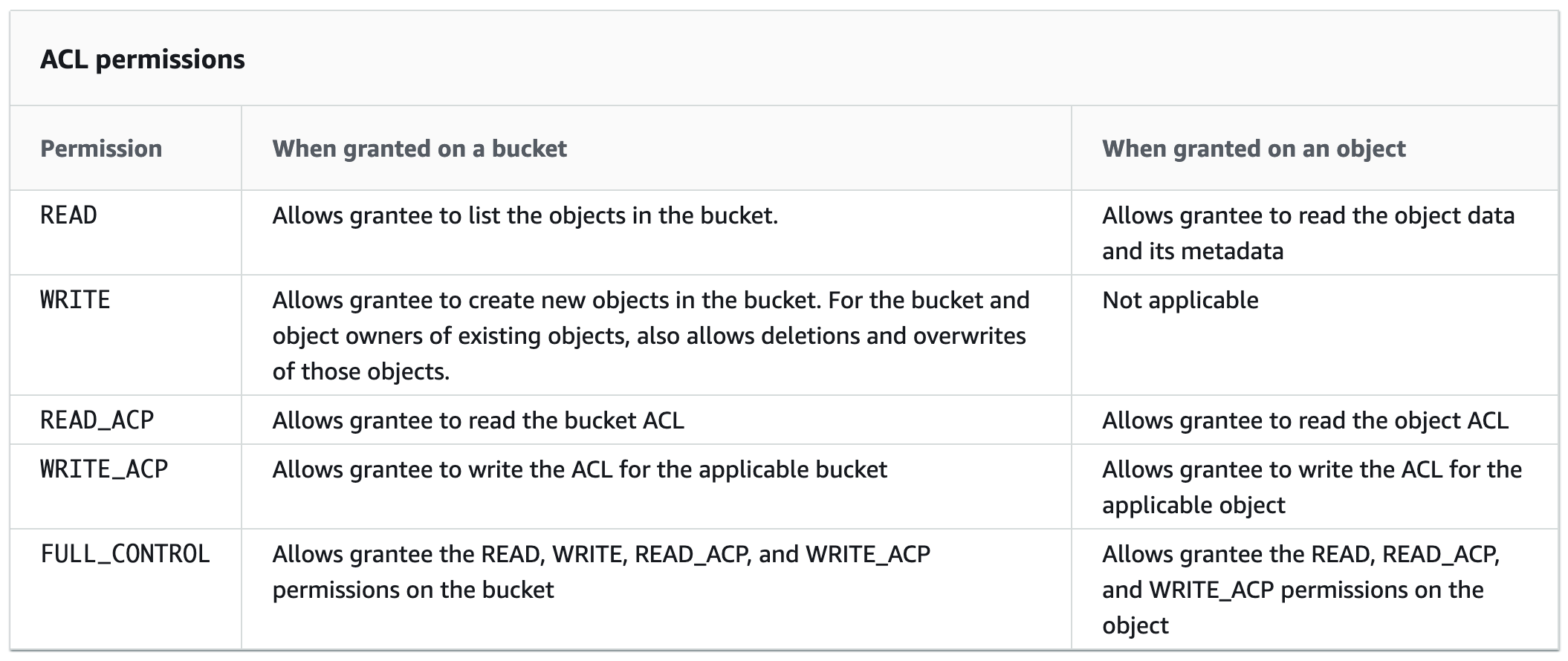
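If you do not want every file in the directory, a recursive copy can be narrowed with filters. The sketch below, with a hypothetical function name, uploads only .log files; --exclude and --include are options of aws s3 cp, and later filters take precedence over earlier ones, which is why everything is excluded first.

```shell
# Hypothetical helper: recursively upload only the *.log files found under
# a local directory. The patterns are quoted so the shell does not expand
# them before the AWS CLI sees them.
# $1 = local directory, $2 = bucket name
upload_only_logs() {
    aws s3 cp "$1" "s3://$2/" --recursive --exclude "*" --include "*.log"
}
```

For example, upload_only_logs . bucket-name uploads the log files from the current directory and its subdirectories.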

Setting the Access Control List (ACL) while copying an S3 object

For security and compliance reasons, we might want to restrict access to the files that we copy to S3 buckets with an access control list, such as read-only or read-write.

Here is the command that copies a file from one bucket to another with a specific ACL applied to the destination object (after it is copied):

aws s3 cp s3://source-bucket-name/file.txt s3://dest-bucket-name/ --acl public-read-write
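To confirm which grants ended up on the copied object, you can read its ACL back. This is a minimal sketch with a hypothetical function name; get-object-acl is an s3api command. If the destination bucket has ACLs disabled through its Object Ownership setting, S3 may reject a copy that passes --acl.

```shell
# Hypothetical helper: print the owner and the list of grants on an object.
# $1 = bucket name, $2 = object key
show_object_acl() {
    aws s3api get-object-acl --bucket "$1" --key "$2"
}
```

For example, show_object_acl dest-bucket-name file.txt.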

There are 5 types of ACL permissions available with S3, which are listed in the following snapshot.

Hope this helps.

How to copy files between EC2 and S3

So far we have discussed how to copy files from the local system to S3 and vice versa.

When it comes to EC2 instances, it is a little different, as authentication can be taken care of by the IAM role assigned to the server.

S3 buckets can also have bucket policies that allow access only to servers coming from a certain AWS account or VPC endpoint, etc.

We have created an article that talks about how to easily copy files between EC2 and S3 with a simple 4 step configuration.

How to copy files with Sync - AWS S3 Sync

You may be looking for more advanced options while copying files, such as skipping files that already exist at the destination or deleting existing files.

In the Linux world, we use rsync rather than scp for this task, and it is faster too.

Likewise, there is a dedicated command for this in aws s3: aws s3 sync.

We have a dedicated article on aws s3 sync. Take a look.
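As a quick taste, here is a minimal sketch of a one-way mirror with a hypothetical function name. aws s3 sync copies only new and changed files; --delete removes files at the destination that no longer exist at the source, and --exclude skips files matching a pattern. The *.tmp pattern is only an example.

```shell
# Hypothetical helper: mirror a local directory to a bucket.
# Files missing from the source are deleted from the bucket, and
# temporary files are never uploaded.
# $1 = local directory, $2 = bucket name
mirror_to_bucket() {
    aws s3 sync "$1" "s3://$2/" --delete --exclude "*.tmp"
}
```

Because --delete removes objects, it is worth adding --dryrun to the command the first time you run it against a real bucket.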

Automate S3 tasks with Ansible

We have an exclusive article covering various examples of Ansible S3 module usage.

If you are looking for some automation with S3, I would recommend you give it a try.

How to use ansible with S3 - Ansible aws_s3 examples | Devops Junction

If you have any feedback or best practices, please feel free to comment and let us know.

Cheers

Hanumanth