The Beautiful thing about Digital world is that we have a Ctrl+z button, snapshots and backups to go back in time and correct our mistakes, which is not possible in our real life.

Enough with a philosophy.

I would not have to tell you how crucial backups are and when it comes to database backups, they hold utmost importance. Because they are like a time machine in Infrastructure and essential for Disaster recovery and rollback.

Objective

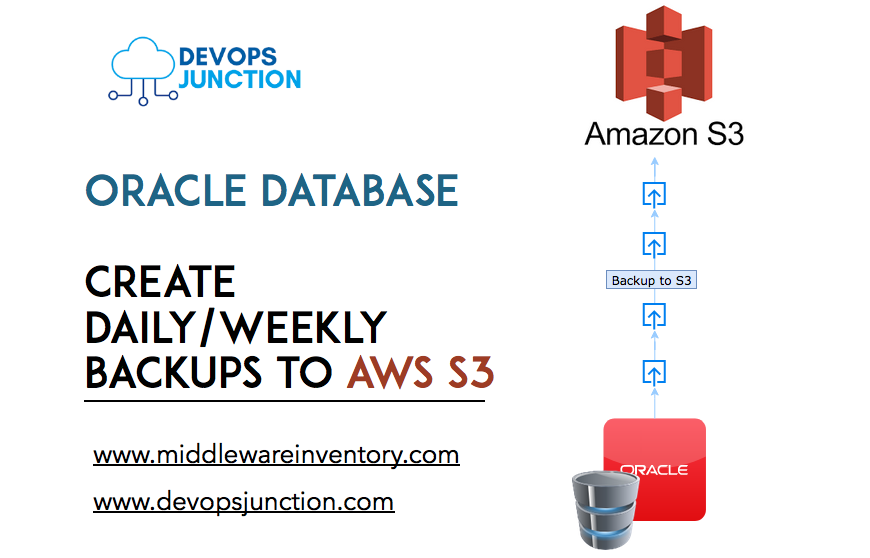

In this article, we are going to see how to take a backup of Oracle database Schema using expdp command and upload the backup to AmazonS3

We will be discussing how to install AWS S3 CLI and how to integrate your Oracle DB server to S3 to upload the backup.

How to schedule and automate the Oracle database backup process at daily, weekly interval using crontab.

Our Oracle Database backup to S3 script also comes with Email notification. If you have SMTP configured in your DB server. you can get notifications upon successful backup completion or in case of any failure.

This article assumes that your Oracle DB is hosted and running in Linux

Let us go into the Subject.

Oracle Database backup to S3 - How to Setup

If your Oracle DB is hosted in AWS as a self-managed EC2 instance. you can easily integrate the EC2 and S3 and copy the files back and forth with help of IAM roles

So it makes it easy for you to copy the backup files to S3 bucket without any programmatic access

If not, you have to install the AWS CLI in your On-Prem Linux instance and use the S3 bucket endpoint of your Amazon S3 bucket and keep your API Keys on the server to be able to log in to S3

Step1: Enabling S3 connectivity

To be able to copy files (upload) from Linux machine to S3 bucket, you can use aws s3 cli commands and our script is designed with the same command.

For the aws s3 cli command to work and to be able to connect to your AWS account, you must have proper authentication configured in your Linux instance.

If your Oracle runs in EC2 Linux on AWS, this can be achieved with IAM roles, Otherwise you can use the API key and the secret to make it work.

These are the steps to be followed when your Oracle DB is hosted in AWS

Click on each step to read more about it.

- Create an IAM role with S3 write access or admin access

- Map the IAM role to an EC2 instance

- Install AWS CLI in EC2 instance

- Run the AWS s3 cp command to copy the files to the S3 bucket ( to test )

When your Oracle is hosted On-Prem outside of AWS, follow these steps

- Setup your Programmatic Access – Access Key and Secret for AWS

- Install AWS CLI

- Configure AWS CLI

- Run the AWS s3 cp command to copy the files to the S3 bucket ( to test )

I assume that you have tested your S3 connectivity and able to copy files from your Oracle Server to S3 bucket, let us move on to the next step.

Step2: Creating the Script with expdp to backup the Database

SSH to the EC2 server where Oracle DB is running and switch to the oracle user account

Copy the below shell script to the Oracle DB server to your desired directory, in my case it is /home/oracle/scripts

#!/bin/bash

# Author: Sarav

# website: https://middlewareinventory.com

# How to execute this script

# /home/oracle/scripts/backup_script.sh > /tmp/backupexec.log 2>&1

# Do not change the output log file name or location /tmp/backupexec.log as it is referred with in the script

#

BASEDIR=`dirname $0`

export DATE=$(date +%m_%d_%y_%H_%M)

export ORACLE_HOME=/app/u01/app/oracle/product/12.2.0.1/dbhome_1

SCHEMA_NAME="DEV_GRITFY"

cd $BASEDIR

echo "Starting to backup a Schema $schemaname"

$ORACLE_HOME/bin/expdp "'/ as sysdba'" directory=datapump schemas=$SCHEMA_NAME dumpfile=$SCHEMA_NAME_$DATE.dmp logfile=$SCHEMA_NAME_$DATE.log flashback_time=systimestamp exclude=statistics PARALLEL=20 COMPRESSION=ALL > $BASEDIR/BackupExec$DATE.log 2>&1

if [ $? -eq 0 ];

then

echo "Successfully completed the Backup"

else

echo "Error during the Backup. Need Attention"

cat /tmp/backupexec.log|mailx -s "ALERT: Oracle DB Backup Failed" -r "Gritfy Infra<[email protected]>" [email protected]

exit 127

fi

echo "Processing the Created dump and Log file"

# this is the actual location of datapump directory specified in the expdp command

cd /app/u01/dp_imp_dir

# Creating a TAR ball with the log and the dmp file

tar cvfz $SCHEMA_NAME_$DATE.tar.gz $SCHEMA_NAME_$DATE.dmp $SCHEMA_NAME_$DATE.log

echo "Moving the Tar file to S3 Bucket"

/usr/local/bin/aws s3 mv $SCHEMA_NAME_$DATE.tar.gz s3://gritfy-db-backups/Oracle/

if [ $? -eq 0 ]

then

echo "Backup has been successfully moved to S3"

cat /tmp/backupexec.log|mailx -s "INFO: Oracle DB Backup Successful" -r "Gritfy Infra<[email protected]>" [email protected]

else

echo "Failure during the S3 Upload"

cat /tmp/backupexec.log|mailx -s "ALERT: Oracle DB Backup Failed" -r "Gritfy Infra<[email protected]>" [email protected]

exit 128

fi

# Remove the tar, dump and log files for healthy disk space after successful S3 backup

rm -f $SCHEMA_NAME_$DATE.tar.gz $SCHEMA_NAME_$DATE.dmp $SCHEMA_NAME_$DATE.log

Make sure you update the ORACLE_HOME variable in the script with your valid oracle home

we are using the Oracle expdp command to backup/export the database and it can be later imported using impdp this is an easiest way of taking a backup of your Oracle DB.

But the problem is that the expdp does a full a backup of schema all the time and it is not incremental backup or restore.

You can also choose which schema you want to be backed up and I have few mandatory/best practice options with this command like directory, parallel etc

You can read more about them in Oracle expdp command manual.

$ORACLE_HOME/bin/expdp "'/ as sysdba'" directory=datapump \ schemas=DEV_GRITFY \ dumpfile=DEV_GRITFY_$DATE.dmp \ logfile=DEV_GRITFY_$DATE.log \ flashback_time=systimestamp \ exclude=statistics \ PARALLEL=20 \ COMPRESSION=ALL > $BASEDIR/BackupExec$DATE.log 2>&1

the export dump and log file would be created on the directory you defined with directory=datapump here datapump is the logical name representing the physical path on the server. in my case it is /app/u01/dp_imp_dir

Once the backup completed successfully, we would cd to this datapump directory and execute the aws s3 mv command to move(upload) the files to S3

/usr/local/bin/aws s3 mv DEV_GRITFY_$DATE.tar.gz s3://gritfy-db-backups/Oracle/

Make sure you create your own S3 bucket and replace the path in the preceding snippet and the script before using

Note*

The script is designed to send emails for both success and failure.

You can optionally remove the lines with

mailxif you do not want the mail notifications.To be able to send emails from your Linux server, You can set up your own SMTP relay using Postfix and Office365.

Step3: Test the script manually

Before scheduling this script into Crontab for an automated daily backup. I recommend you to execute the script and validate if it works fine without any issues.

execute the script as mentioned within the script header

/home/oracle/scripts/backup_script.sh > /tmp/backupexec.log 2>&1

to track the progress, refer the expdp out log defined ( would be created in the datapump directory with a schema name prefix)

Step4: Schedule the script for Daily/Weekly Backup with Cron

If you have tested your script manually and found it to working precisely, You can go ahead and schedule this script to run every day during non-business hours or weekly, bi-weekly based on your requirement

This would automate your oracle database backup to S3 requirement.

In my case, I am scheduling this daily backup to run every day at 2 am.

Here is what I had to do to create a crontab entry.

echo "00 2 * * * /home/oracle/scripts/backup_script.sh > /tmp/backupexec.log 2>&1" | crontab -

execute the preceding command as an oracle user after making necessary modification ( if need be)

To validate if it is scheduled. use the crontab -l command to list

You can use crontab.guru if you have any question on the crontab syntax

Conclusion

Hope this article helps you to set up an automated Oracle database backup to S3.

This script can serve as a minimal template or meet your full requirement. You can make changes as per your need

I would really advise you to execute this script (or) backup setup in DEV environment first before moving to production.

If you have any questions or any inputs to improve the script or this article. Please let me know in comments.

Cheers

Sarav AK

Follow me on Linkedin My Profile Follow DevopsJunction onFacebook orTwitter For more practical videos and tutorials. Subscribe to our channel

Signup for Exclusive "Subscriber-only" Content